Making an Asset Management App Voice-Ready

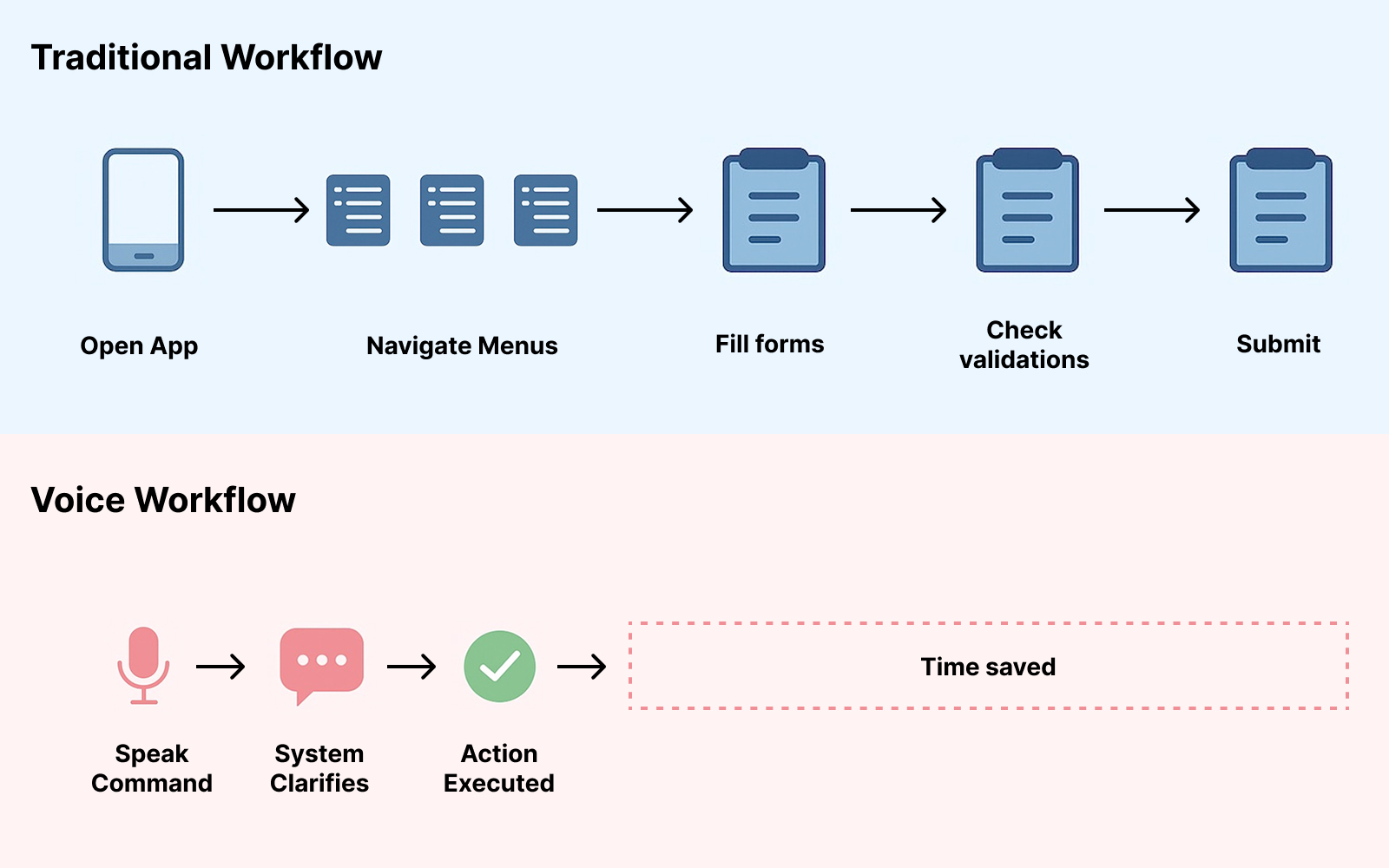

We added a GenAI-powered voice layer to an asset management app. Users can now speak commands instead of tapping through multiple screens. The system interprets each command, asks for clarification when needed, and executes the resulting action, which makes the workflow faster and easier.

Built in just two weeks, the service is designed to plug into any mobile app with minimal effort.

Context

We recently worked on a project to make an existing asset management mobile app voice-enabled. The goal was simple: allow users to dictate instructions instead of navigating through multiple taps, forms, and menus.

This matters because in apps where a single task spans several steps, every tap removed saves time and makes the app easier to use.

Approach

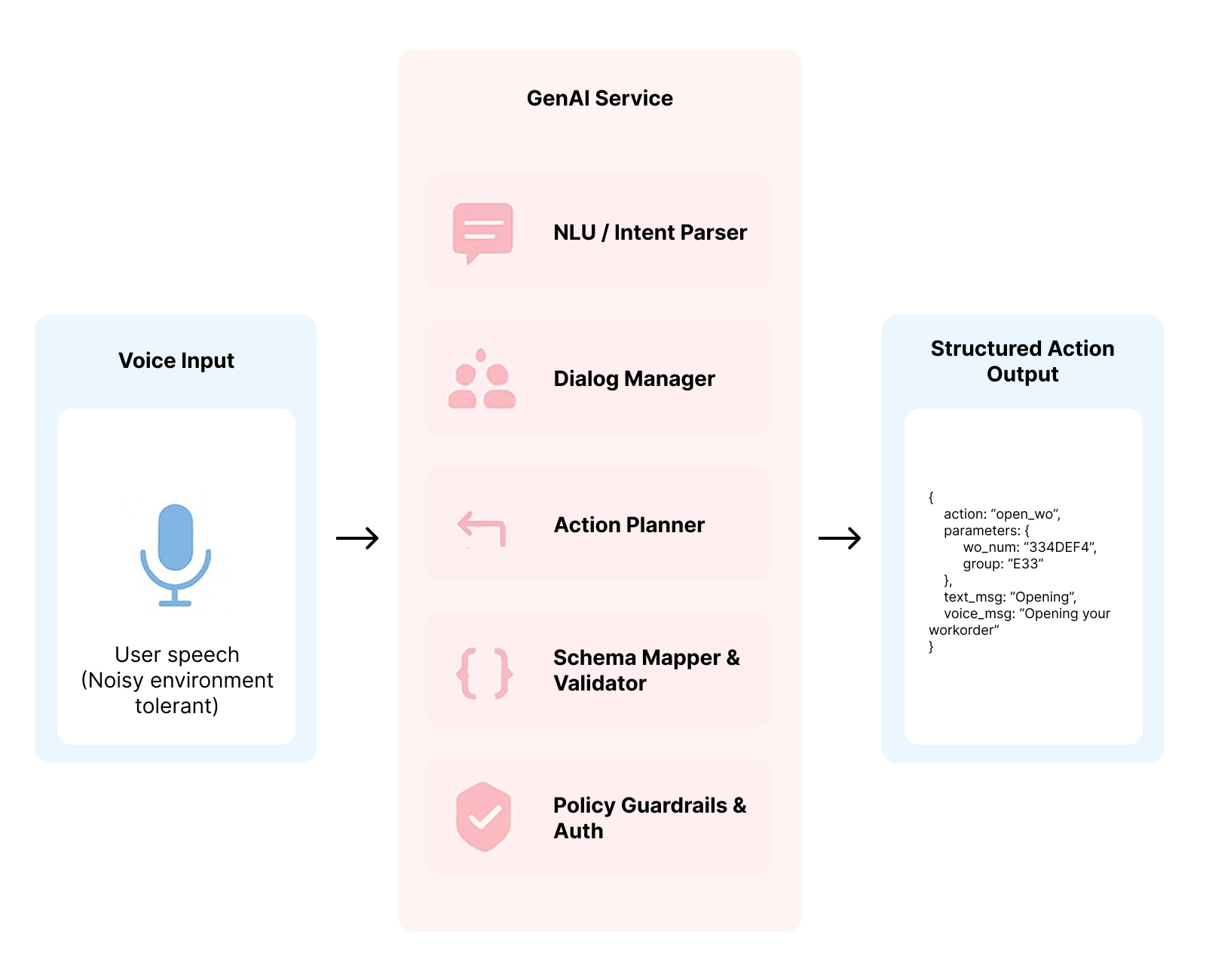

We built a GenAI-powered service that can:

- Understand spoken user commands

- Ask clarifying questions if needed

- Confirm the action

- Generate structured responses that the mobile app can execute
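
The four capabilities above can be read as a small decision flow. The following is a minimal sketch of that flow, not the project's actual code: it assumes the GenAI model has already turned the utterance into an action name and a set of fields, and the names `REQUIRED_FIELDS`, `Turn`, and `next_turn`, along with the example actions, are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical action catalogue: action name -> fields the app needs.
REQUIRED_FIELDS = {
    "transfer_asset": ["asset_id", "destination"],
    "report_fault": ["asset_id", "description"],
}


@dataclass
class Turn:
    """What the service sends back to the app after each user utterance."""
    kind: str                                    # "clarify", "confirm", or "execute"
    message: str = ""                            # text to speak or display
    command: dict = field(default_factory=dict)  # filled only for "execute"


def next_turn(action: str, fields: dict, confirmed: bool = False) -> Turn:
    """Decide the next step for an already-interpreted command.

    `action` and `fields` stand in for the output of the GenAI model,
    which is not shown here.
    """
    # Unknown action: ask the user what they meant.
    if action not in REQUIRED_FIELDS:
        return Turn("clarify", "Sorry, which action would you like to perform?")

    # Known action with gaps: ask for the missing fields.
    missing = [name for name in REQUIRED_FIELDS[action] if not fields.get(name)]
    if missing:
        return Turn("clarify", f"Please provide: {', '.join(missing)}.")

    # Complete but unconfirmed: read the action back to the user.
    if not confirmed:
        summary = ", ".join(f"{k}={v}" for k, v in fields.items())
        return Turn("confirm", f"Run {action} with {summary}?")

    # Complete and confirmed: emit the structured command for the app.
    return Turn("execute", command={"action": action, "fields": fields})
```

In this sketch the app would call `next_turn` after every utterance and keep prompting until it receives a turn of kind `"execute"`, at which point it runs the command it was handed.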

The service was designed as a plug-in component. As long as the target app defines its possible actions and response structure, the voice layer can be integrated with minimal changes.
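
As an illustration of what "defines its possible actions and response structure" could look like, here is a hedged sketch in which the host app registers a catalogue of actions and the voice layer validates each model-generated JSON command against it. The `ActionSpec` shape, the example actions, and the `{"action": ..., "params": ...}` payload format are assumptions made for this example, not the project's actual contract.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class ActionSpec:
    """One action the host app exposes to the voice layer (hypothetical shape)."""
    name: str
    required: tuple[str, ...]
    optional: tuple[str, ...] = ()


# Hypothetical catalogue supplied by the host app at integration time.
ACTIONS = {
    spec.name: spec
    for spec in (
        ActionSpec("assign_asset", required=("asset_id", "assignee")),
        ActionSpec("update_status", required=("asset_id", "status"), optional=("note",)),
    )
}


def parse_command(raw: str) -> dict:
    """Validate a JSON command against the catalogue; raise ValueError if invalid."""
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(payload, dict):
        raise ValueError("command must be a JSON object")

    # Only actions the app has declared are accepted.
    name = payload.get("action")
    spec = ACTIONS.get(name) if isinstance(name, str) else None
    if spec is None:
        raise ValueError(f"unknown action: {name!r}")

    params = payload.get("params", {})
    if not isinstance(params, dict):
        raise ValueError("params must be a JSON object")

    # Reject commands with missing or undeclared parameters.
    missing = [k for k in spec.required if k not in params]
    unexpected = [k for k in params if k not in spec.required + spec.optional]
    if missing or unexpected:
        raise ValueError(f"missing={missing}, unexpected={unexpected}")
    return {"action": spec.name, "params": params}
```

Under these assumptions, porting the voice layer to another app would mostly mean swapping the `ACTIONS` catalogue, since the validation logic does not depend on any specific action.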

Outcome

- Time to delivery: 3 weeks (from idea to user-testing release)

- Ease of integration: The service is portable across mobile applications

- User experience: Tasks that previously took multiple taps can now be performed with a single spoken instruction